
Lack of organizational readiness is making your AI pilots stall

Amir Ouki

Managing Director, Applied AI & Technology

Many companies assume that once a model is working technically, it’s ready to be deployed across the organization. But when AI solutions stall, it’s rarely because the algorithm failed; it’s usually because the organization wasn’t ready to support it.

Successful scaling depends as much on people, roles, and processes as it does on infrastructure. And in most companies, organizational readiness is the weakest link. In this article, we’ll break down what organizational readiness really means and how to fix any gaps before they make your latest AI build fail at scale.


Why AI solutions fail after launch

Even technically sound AI systems often struggle once they leave the lab. Why?

In other words, the model might work, but the system doesn’t.


Problem 1: No one owns the solution

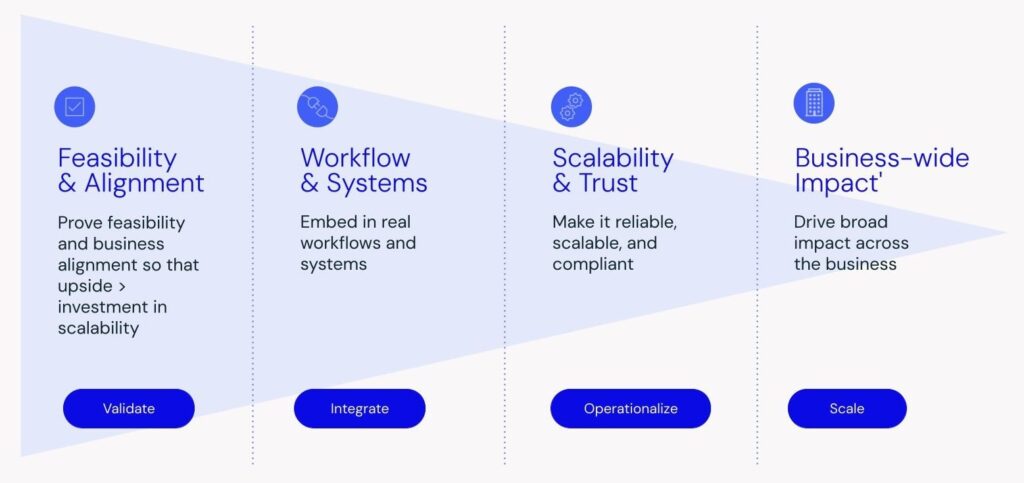

This first stage is often confused with technical prototyping. In reality, it’s broader. The goal isn’t just to prove that the model works, but to validate that scaling the solution would deliver enough business value to justify the investment of time and resources.

Why this happens

AI pilots are often owned by innovation or data teams. But once the proof of concept ends, there’s no clear handoff to an owner in the business. The tool floats in limbo: no one updates or monitors it, and no one is accountable for outcomes.

What to do about it

Build ownership into the team structure from day one:

Problem 2: The right roles don’t exist yet

Why this happens

AI introduces new workflows and skill sets that don’t fit neatly into traditional organizational charts. Many teams don’t have the right capabilities to support AI systems after launch.

Common missing roles include:

AI product managers

They must bridge the gap between users, engineers, and data scientists

Model Ops engineers

Deployment, retraining, versioning, and monitoring must be handled continuously

Prompt engineers

Because AI is an evolving capability, generative models need to be fine-tuned and maintained for specific contexts

Data stewards

Data quality, lineage, and compliance must be thoroughly monitored and governed

What to do about it

Scaling requires organizational design and team capability, not just model performance. Define these roles clearly, even if they start as part-time functions, and staff them early in the lifecycle. Think of them not as an investment in a specific capability but as the connective tissue that allows AI to operate across the business.

Problem 3: Business teams don’t understand the solution

Why this happens

If business users can’t understand the logic behind an AI system, or don’t trust it, they’ll ignore or resist it. Trust and explainability aren’t just compliance checkboxes; they’re adoption drivers.

This is especially true when the model generates non-binary outputs, like recommendations or risk scores. Without transparency, users default to their old workflows or override model outputs, defeating the purpose.

What to do about it

Problem 4: AI isn’t designed to work with existing workflows

Why this happens

Most enterprise tools are deeply embedded in workflows. If an AI solution requires users to open a new interface, change context, or take extra steps, it often gets ignored. AI must integrate into how people already work.

What to do about it

Design for workflow compatibility from the start. Avoid building “AI tools” that sit on the side. Instead, embed AI into the tools teams already trust:

Problem 5: The culture isn’t ready

Why this happens

AI adoption requires a mindset shift from project-based thinking to capability-based thinking. But many teams still see AI as something “extra” or experimental. That creates friction, fear, or fatigue when it’s time to operationalize.

What to do about it

Shift the culture by:

This is where an AI Center of Excellence can play a critical role: supporting local teams, building shared infrastructure, and driving enterprise-wide fluency.

A CoE isn’t just a hub of technical experts; it’s an enablement function that helps operationalize AI across the organization. It sets standards for model development, deployment, and monitoring.

It offers shared services like model registries, embedding stores, or compliance toolkits that individual teams can build on.

Critically, it also acts as a bridge between business units and technical teams, helping translate use cases into scalable architectures and ensuring AI adoption isn’t limited to early adopters. The CoE doesn’t own every solution; it enables others to build confidently and responsibly.
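To make the idea of a shared model registry a little more concrete, here is a minimal sketch in Python. It is an illustration only: the `ModelRecord` and `ModelRegistry` names, the record fields, and the 90-day review window are all assumptions made for this example, not part of any specific product or of the approach described above. The sketch shows one way a registry could enforce named ownership and flag models that nobody has reviewed recently.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class ModelRecord:
    """One registered model version (hypothetical schema)."""
    name: str
    version: str
    business_owner: str   # accountable person or team in the business
    technical_owner: str  # team that deploys, retrains, and monitors
    last_reviewed: date


class ModelRegistry:
    """Illustrative in-memory registry; a real one would persist its records."""

    def __init__(self) -> None:
        self._records: dict[tuple[str, str], ModelRecord] = {}

    def register(self, record: ModelRecord) -> None:
        # Refuse models without named owners so nothing floats in limbo.
        if not record.business_owner.strip() or not record.technical_owner.strip():
            raise ValueError(f"{record.name} {record.version}: both owners are required")
        self._records[(record.name, record.version)] = record

    def overdue_for_review(self, today: date, max_age_days: int = 90) -> list[ModelRecord]:
        # Return models whose last review is older than the assumed review window.
        cutoff = today - timedelta(days=max_age_days)
        return [r for r in self._records.values() if r.last_reviewed < cutoff]
```

A team might register each model version at handoff and run `overdue_for_review` on a schedule, routing the results to the named business owner. The design choice worth noting is that ownership is validated at registration time rather than audited afterwards.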


AI isn’t just a technical upgrade, but an organizational one

You can have a robust model, clean data, and perfect infrastructure, but if no one owns the system, understands it, or integrates it into daily work, it will never scale. Here’s what scalable AI organizations invest in (apart from the tech):


Amir Ouki

Managing Director, Applied AI & Technology

Amir leads BOI’s global team of product strategists, designers, and engineers in designing and building AI technology that transforms roles, functions, and businesses. Amir loves to solve complex real-world challenges that have an immediate impact, and is especially focused on KPI-led software that drives growth and innovation across the top and bottom line. He can often be found (objectively) evaluating and assessing new technologies that could benefit our clients, and he has launched products with Anthropic, Apple, Netflix, Palantir, Google, Twitch, Bank of America, and others.


The post Lack of organizational readiness is making your AI pilots stall appeared first on BOI (Board of Innovation).